- RAG with Azure OpenAI allows developers to use supported AI chat models that can reference specific sources of information to ground the response.

- Adding this information allows the model to reference both the specific data provided and its pretrained knowledge to provide more effective responses.

- Azure OpenAI enables RAG by connecting pretrained models to your own data sources.

- Azure OpenAI on your data utilizes the search ability of Azure AI Search to add the relevant data chunks to the prompt.

- Once your data is in an AI Search index, Azure OpenAI on your data goes through the following steps:

- Receive user prompt.

- Determine relevant content and intent of the prompt.

- Query the search index with that content and intent.

- Insert search result chunk into the Azure OpenAI prompt, along with system message and user prompt.

- Send entire prompt to Azure OpenAI.

- Return response and data reference (if any) to the user.
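The steps above can be sketched in a few lines of Python. This is a toy illustration only: the in-memory `INDEX`, the keyword-overlap `search_index` function, and `build_prompt` are hypothetical stand-ins for Azure AI Search and the service's internal prompt assembly, and no call to Azure OpenAI is made.

```python
import re

# Hypothetical in-memory "index" standing in for an Azure AI Search index.
INDEX = [
    {"id": "doc1", "content": "The Grand Plaza hotel in New York is close to Central Park."},
    {"id": "doc2", "content": "London offers many museums with free entry."},
    {"id": "doc3", "content": "New York hotel prices are lowest in January."},
]

STOP_WORDS = {"i", "to", "the", "a", "in", "is", "want", "go", "should", "where", "what"}


def extract_keywords(user_prompt):
    """Step 2 (toy version): reduce the prompt to lowercase content words."""
    words = re.findall(r"[a-z]+", user_prompt.lower())
    return {w for w in words if w not in STOP_WORDS}


def search_index(keywords, top_n=2):
    """Step 3 (toy version): rank chunks by how many keywords they contain."""
    scored = []
    for doc in INDEX:
        doc_words = set(re.findall(r"[a-z]+", doc["content"].lower()))
        score = len(keywords & doc_words)
        if score > 0:
            scored.append((score, doc))
    # Stable sort: chunks with equal scores keep their index order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_n]]


def build_prompt(system_message, user_prompt, chunks):
    """Step 4: combine system message, retrieved chunks, and user prompt."""
    grounding = "\n".join(f"[{c['id']}] {c['content']}" for c in chunks)
    return [
        {"role": "system", "content": f"{system_message}\n\nSources:\n{grounding}"},
        {"role": "user", "content": user_prompt},
    ]


user_prompt = "I want to go to New York. Where should I stay?"  # Step 1
chunks = search_index(extract_keywords(user_prompt))
messages = build_prompt("You are a helpful travel assistant.", user_prompt, chunks)
# Steps 5 and 6 (sending `messages` to the model and returning the response
# with its data references) are omitted because they need a live deployment.
```

The real service determines intent and ranks results far more carefully than this keyword overlap does; the point is only the order of operations: retrieve first, then augment the prompt, then generate.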

- Fine-tuning vs. RAG

- Fine-tuning is a technique used to create a custom model by training an existing foundational model such as gpt-35-turbo with a dataset of additional training data.

- Fine-tuning can result in higher quality responses than prompt engineering alone, customize the model on examples larger than can fit in a prompt, and allow the user to provide fewer examples to get the same high quality response.

- However, the process for fine-tuning is both costly and time intensive, and should only be used for use cases where it's necessary.

- RAG with Azure OpenAI on your data still uses the stateless API to connect to the model, which removes the requirement of training a custom model with your data and simplifies the interaction with the AI model.

- AI Search first finds the useful information to answer the prompt, adds that to the prompt as grounding data, and Azure OpenAI forms the response based on that information.

- You add your data either in the Chat playground in Azure AI Studio or by specifying your data source in an API call.

- The data source you add is then used to augment the prompt sent to the model.

- When setting up your data in the studio, you can choose to upload your data files, use data in a blob storage account, or connect to an existing AI Search index.

- Azure OpenAI on your data supports .md, .txt, .html, .pdf, and MS Word or PowerPoint files.

- If any of these files contain graphics or images, the response quality depends on how well text can be extracted from the visual content.

- It's recommended to use the Azure AI Studio to create the search resource and index.

- Adding data this way allows the appropriate chunking to happen when inserting into the index, yielding better responses.

- If you're using large text files or forms, you should use the available data preparation script to improve the AI model's accuracy.
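To make "chunking" concrete, here is a minimal sketch of splitting a document into overlapping word-based chunks before indexing. The function name `chunk_text` and the `chunk_size` and `overlap` values are assumptions chosen for illustration; the chunking performed by Azure AI Studio or by the data preparation script may work differently.

```python
def chunk_text(text, chunk_size=50, overlap=10):
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words, so text near a chunk
    boundary appears in both neighbouring chunks.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


# Example: a 120-word document split into 50-word chunks with 10 words of overlap.
sample = " ".join(f"word{i}" for i in range(120))
chunks = chunk_text(sample, chunk_size=50, overlap=10)
```

Smaller chunks leave more room in the prompt for conversation history, while larger chunks keep more context together; the right balance depends on the data.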

- Enabling semantic search for your AI Search service can improve the result of searching your data index and you're likely to receive higher quality responses and citations. However, enabling semantic search may increase the cost of the search service.

- Use the wizard in your AI Search resource to vectorize your data appropriately. This takes a few more steps than doing so in AI Studio, but it serves as a good example of using the RAG pattern with an existing dataset.

- To connect your data, go to the Chat playground, select the Add your data tab, and then select the Add a data source button. The wizard guides you through setting up the connection to each data source and getting that data into a search index.

- To use the wizard in AI Studio to create and connect your data source, you need to create a hub and a project.

- AI Studio will walk you through doing that, or refer to the AI Studio documentation.

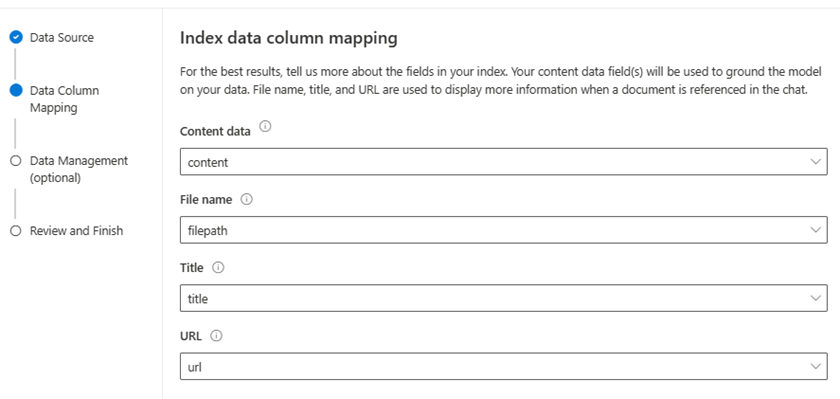

- If you're using your own index that wasn't created through Azure AI Studio, one of the pages allows you to specify your column mapping.

- It's important to provide accurate fields, to enable the model to provide a better response, especially for Content data.

-

- Token considerations and recommended settings

- Each call to the model includes tokens for the system message, the user prompt, conversation history, retrieved search documents, internal prompts, and the model's response.

- The system message, for example, is a useful reference for instructions for the model and is included with every call.

- The system message has no fixed token limit of its own, but when using your own data it gets truncated if it exceeds the model's token limit (which varies per model, from 400 to 4000 tokens).

- The response from the model is also limited to 1500 tokens when using your own data.

- Due to these token limitations, it's recommended that you limit both the question length and the conversation history length in your call.

- Prompt engineering techniques such as breaking down the task and chain of thought prompting can help the model respond more effectively.
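One way to act on the recommendation to limit conversation history is to keep only the most recent turns that fit within a token budget. The sketch below is illustrative: `estimate_tokens` uses a crude assumption of roughly four characters per token rather than a real tokenizer, and `trim_history` and the budget value are hypothetical.

```python
def estimate_tokens(text):
    """Very rough token estimate (assumes about 4 characters per token)."""
    return max(1, len(text) // 4)


def trim_history(messages, max_history_tokens):
    """Keep the system message plus the newest messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    kept = []
    used = 0
    # Walk backwards so the most recent turns are considered first.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if used + cost > max_history_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return system + kept


history = [
    {"role": "system", "content": "You are a helpful travel assistant."},
    {"role": "user", "content": "Tell me about London." + " More detail please." * 20},
    {"role": "assistant", "content": "London has many museums."},
    {"role": "user", "content": "I want to go to New York. Where should I stay?"},
]
trimmed = trim_history(history, max_history_tokens=30)
```

Here the long first user turn is dropped, while the system message and the two most recent messages are kept. A production version would count tokens with the tokenizer that matches the deployed model.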

- Using the API - Specify the data source where your data is stored. With each call you need to include the endpoint, key, and indexName for your AI Search resource.

```json
{
    "dataSources": [
        {
            "type": "AzureCognitiveSearch",
            "parameters": {
                "endpoint": "<your_search_endpoint>",
                "key": "<your_search_key>",
                "indexName": "<your_search_index>"
            }
        }
    ],
    "messages": [
        {
            "role": "system",
            "content": "You are a helpful assistant assisting users with travel recommendations."
        },
        {
            "role": "user",
            "content": "I want to go to New York. Where should I stay?"
        }
    ]
}
```

- When using your own data, the call must be sent to a different endpoint than the one used for a base model; its path includes extensions. The request also needs to include the Content-Type and api-key headers.

<your_azure_openai_resource>/openai/deployments/<deployment_name>/extensions/chat/completions?api-version=<version>
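Putting the request body, endpoint, and headers together, a call could be assembled roughly as follows. This is a sketch, not a definitive client: `build_request` is a hypothetical helper, every value in angle brackets is a placeholder to replace with your own, and the request is only constructed here, not sent.

```python
import json


def build_request(url, api_key, search_endpoint, search_key, index_name, messages):
    """Return the URL, headers, and JSON body for an 'on your data' call."""
    body = {
        "dataSources": [
            {
                "type": "AzureCognitiveSearch",
                "parameters": {
                    "endpoint": search_endpoint,
                    "key": search_key,
                    "indexName": index_name,
                },
            }
        ],
        "messages": messages,
    }
    headers = {
        "Content-Type": "application/json",
        "api-key": api_key,  # Azure OpenAI key, not the AI Search key
    }
    return {"url": url, "headers": headers, "data": json.dumps(body)}


request = build_request(
    url="<your_endpoint_url>",  # placeholder: follows the endpoint pattern shown above
    api_key="<your_azure_openai_key>",
    search_endpoint="<your_search_endpoint>",
    search_key="<your_search_key>",
    index_name="<your_search_index>",
    messages=[
        {"role": "system", "content": "You are a helpful assistant assisting users with travel recommendations."},
        {"role": "user", "content": "I want to go to New York. Where should I stay?"},
    ],
)
```

The returned dictionary can be passed to any HTTP client as a POST request. Keeping the two keys as separate parameters makes it harder to confuse the Azure OpenAI key with the AI Search key.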

Lab - https://microsoftlearning.github.io/mslearn-openai/Instructions/Exercises/06-use-own-data.html

-

What does RAG with Azure OpenAI enable developers to do?

- Create their own AI chat models

- Access Azure OpenAI without an approved subscription

- Use supported AI chat models that can reference specific sources of data - Correct. Azure OpenAI on your data allows developers to use supported AI chat models that can reference specific sources of data to ground the response.

-

What is the recommended way to add data when using Azure OpenAI on your data?

- Using any data source option available for Azure OpenAI on your data.

- Using Azure AI Studio to create the search resource and index. - Correct. Using Azure AI Studio allows the appropriate chunking to happen when inserting into the index, yielding better responses.

- Connecting to files in a storage account without using Azure AI Studio.

-

What are some recommended prompt engineering techniques when using RAG with Azure OpenAI on your own data?

- Break down the task and use chain of thought prompting. - Correct. Breaking down the task and using chain of thought prompting can help the model respond more effectively within the token limit.

- Include as much conversation history as possible in your call.

- Use a single long prompt to provide all necessary information.