Below are image and audio (narrated mp3) samples extracted from the matlok datasets. These samples show how the images look and how the mp3s are structured, with a question and an answer taken from the text box in each image's knowledge graph.

Welcome to the matlok multimodal python copilot training datasets. This is an overview of our training and fine-tuning datasets, found below:

- ~2.35M unique source code rows

- ~1.7M instruct alpaca yaml text rows

- ~923K png knowledge graph images with alpaca text description

- ~410K mp3s totaling ~2 years of continuous audio playtime

- requires 1.2 TB of storage on disk
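
As a rough sanity check on the audio figures above, the average mp3 length implied by ~410K files and ~2 years of playtime can be worked out as follows. This is only a back-of-the-envelope sketch: it assumes 365-day years and treats the rounded counts as exact, so the real average may differ.

```python
# Back-of-the-envelope estimate; the inputs are the rounded figures from the list above.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: 365-day years

total_playtime_seconds = 2 * SECONDS_PER_YEAR  # ~2 years of continuous audio
mp3_count = 410_000                            # ~410K mp3s

average_seconds = total_playtime_seconds / mp3_count
print(f"~{average_seconds:.0f} seconds (~{average_seconds / 60:.1f} minutes) per mp3 on average")
# prints: ~154 seconds (~2.6 minutes) per mp3 on average
```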

Please reach out if you find an issue or want help with a similar dataset. We want to make it easier to create and share large datasets: hello@matlok.ai

These are knowledge graphs created for training generative ai models on how to write python CLIP transformer code by providing an overview of:

- classes

- base classes for inheritance and polymorphism

- global functions

- imports
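
To make the four categories above concrete, here is a minimal sketch of how they could be pulled out of a python module with the standard library `ast` module. It is not the pipeline used to build these datasets: the `summarize_module` helper and the sample source are hypothetical, and `ast.unparse` assumes Python 3.9 or newer.

```python
import ast

# Made-up sample module for illustration; it is not the real transformers source.
SAMPLE_SOURCE = '''
import os
from collections import OrderedDict

class PretrainedConfig:
    pass

class CLIPConfig(PretrainedConfig):
    def to_dict(self):
        return {}

def convert_checkpoint(path):
    return os.path.basename(path)
'''


def summarize_module(source: str) -> dict:
    """Collect top-level classes, their base classes, global functions and imports."""
    summary = {"classes": [], "base_classes": {}, "global_functions": [], "imports": []}
    # Only walk the module's top-level statements, so methods are not counted as global functions.
    for node in ast.parse(source).body:
        if isinstance(node, ast.ClassDef):
            summary["classes"].append(node.name)
            summary["base_classes"][node.name] = [ast.unparse(base) for base in node.bases]
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            summary["global_functions"].append(node.name)
        elif isinstance(node, ast.Import):
            summary["imports"].extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom):
            # Keep leading dots so relative imports stay recognizable.
            module = "." * node.level + (node.module or "")
            summary["imports"].extend(f"{module}.{alias.name}" for alias in node.names)
    return summary


summary = summarize_module(SAMPLE_SOURCE)
print(summary["classes"])           # ['PretrainedConfig', 'CLIPConfig']
print(summary["base_classes"])      # {'PretrainedConfig': [], 'CLIPConfig': ['PretrainedConfig']}
print(summary["global_functions"])  # ['convert_checkpoint']
print(summary["imports"])           # ['os', 'collections.OrderedDict']
```

Each key of the summary corresponds to one of the knowledge graph types listed above; a graph library could then turn the names into nodes and the relationships (for example class to base class) into edges.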

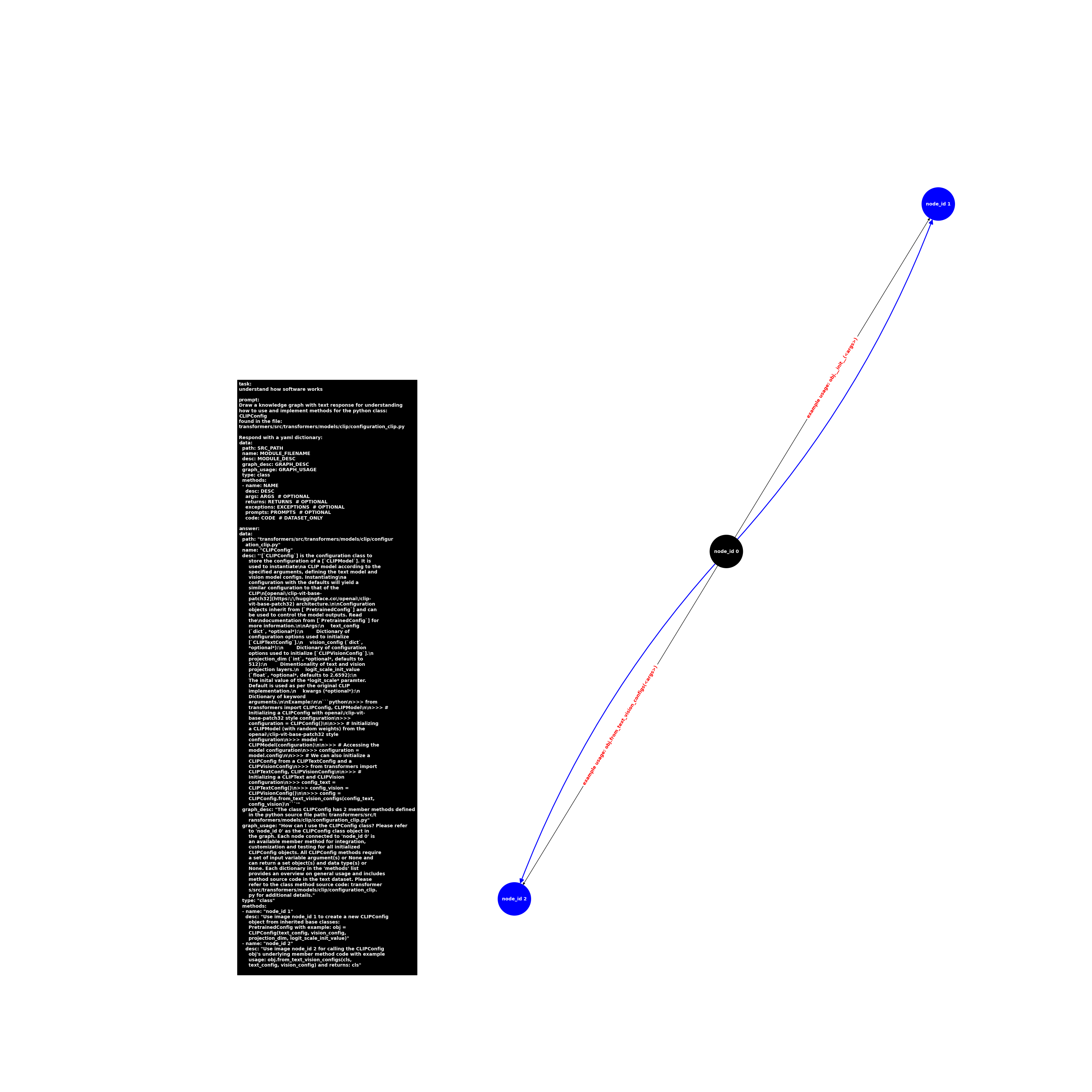

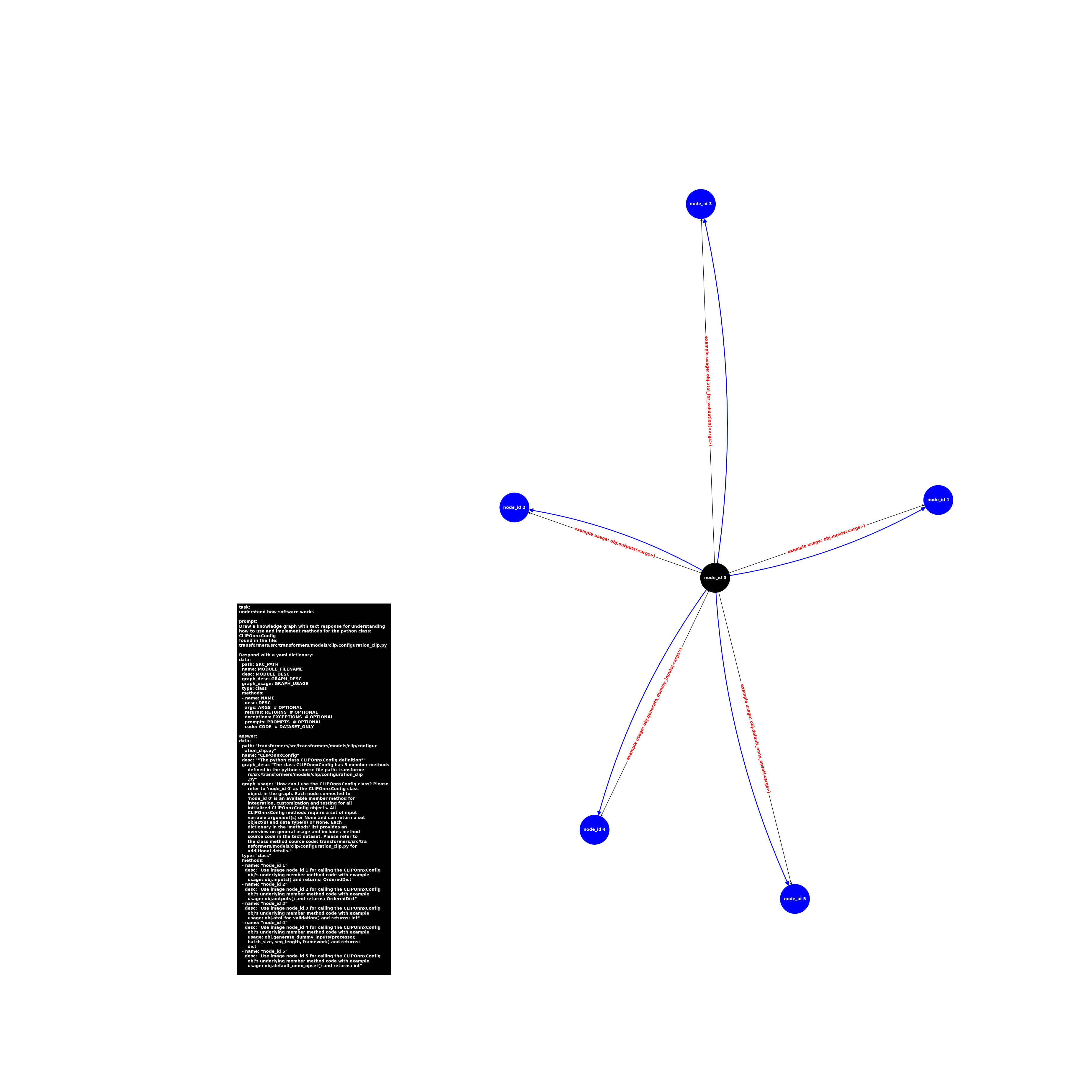

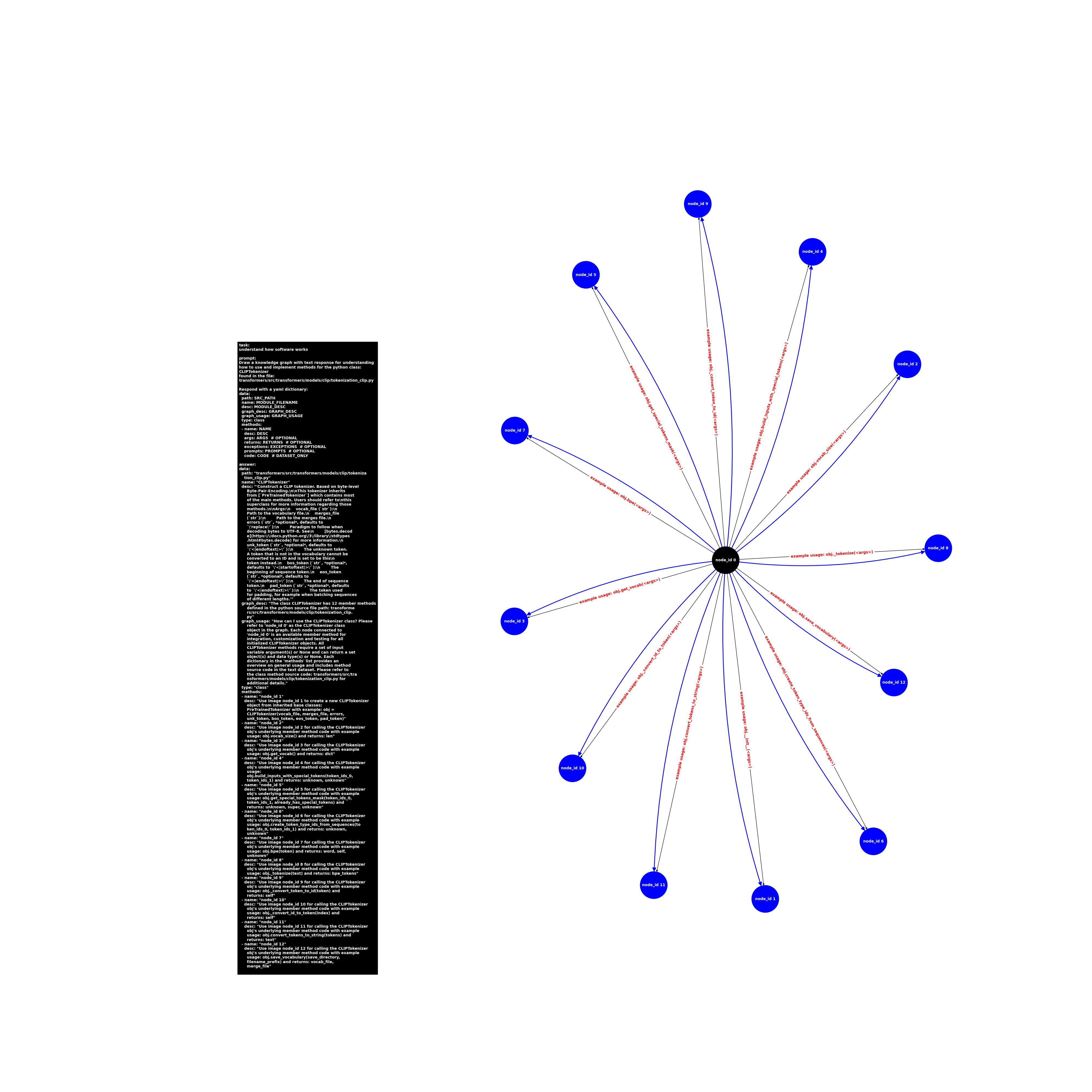

Here are samples from the python copilot class image knowledge graph dataset (304 GB). These images attempt to teach how to use software with a networkx graph saved as a png with an alpaca text box:

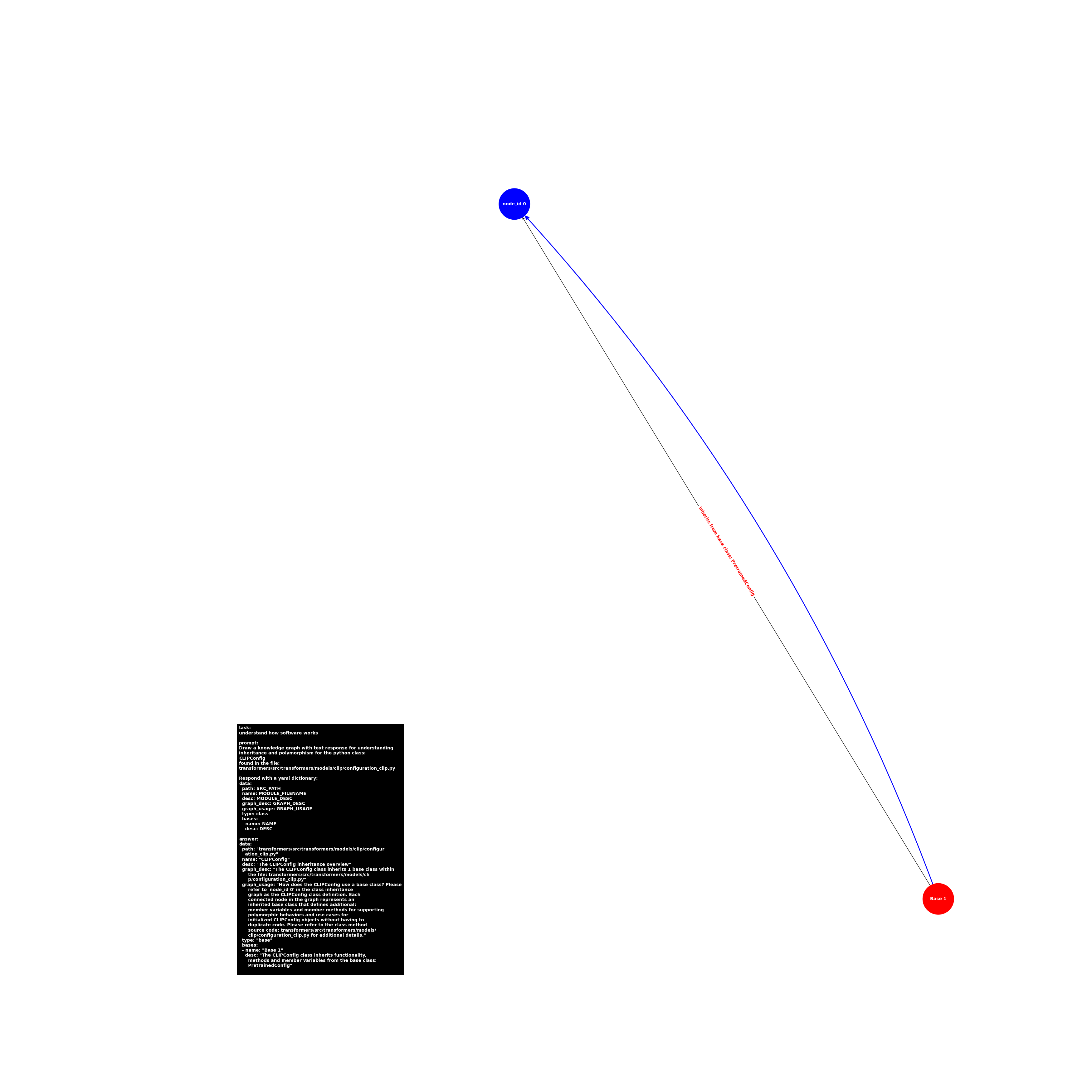

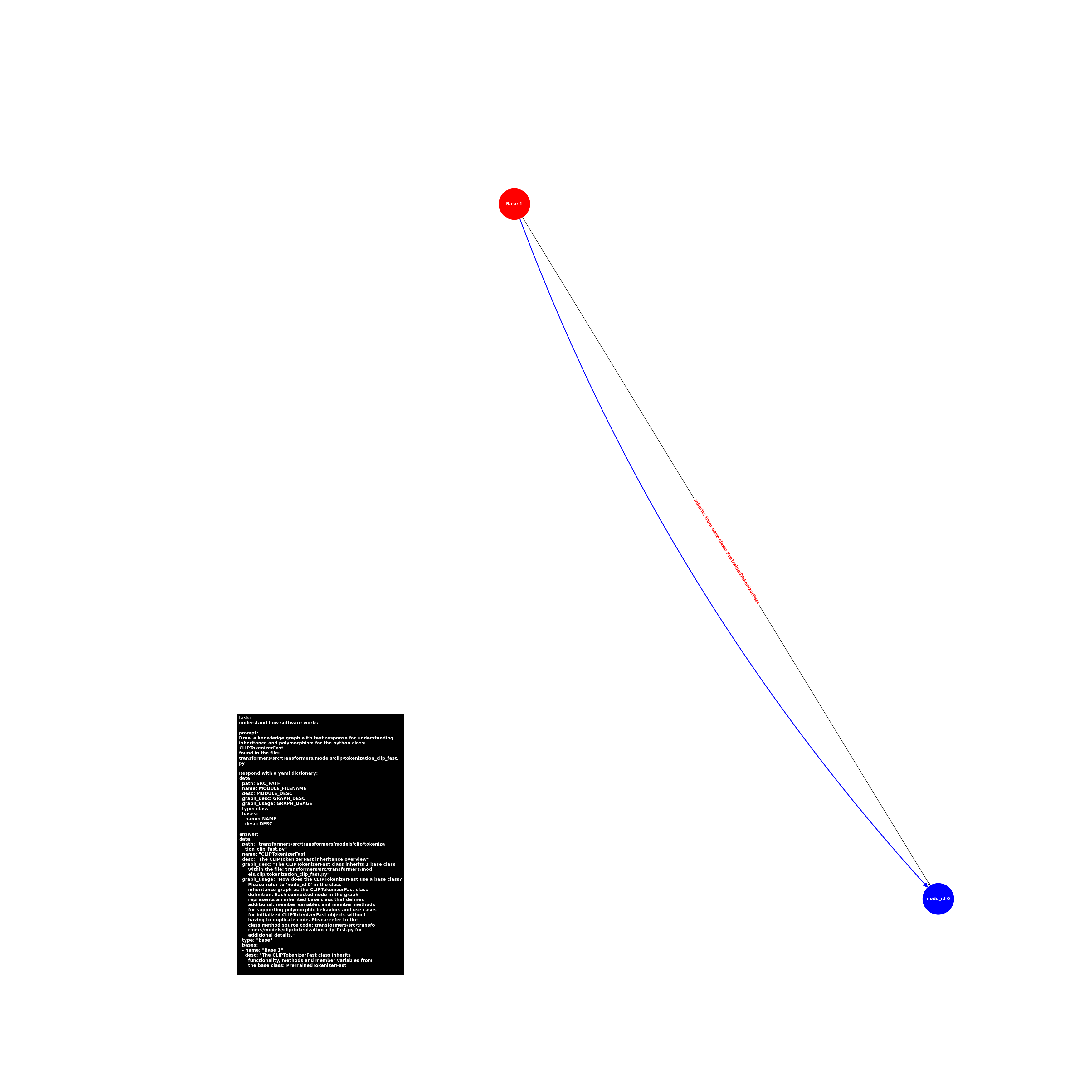

Here are samples from the python copilot base class inheritance and polymorphism image knowledge graph dataset (135 GB). These images attempt to teach how to use software with a networkx graph saved as a png with an alpaca text box:

How to use the transformers/src/transformers/models/clip/configuration_clip.py CLIPConfig inherited base class(es)

How to use the transformers/src/transformers/models/clip/tokenization_clip_fast.py CLIPTokenizerFast inherited base class(es)

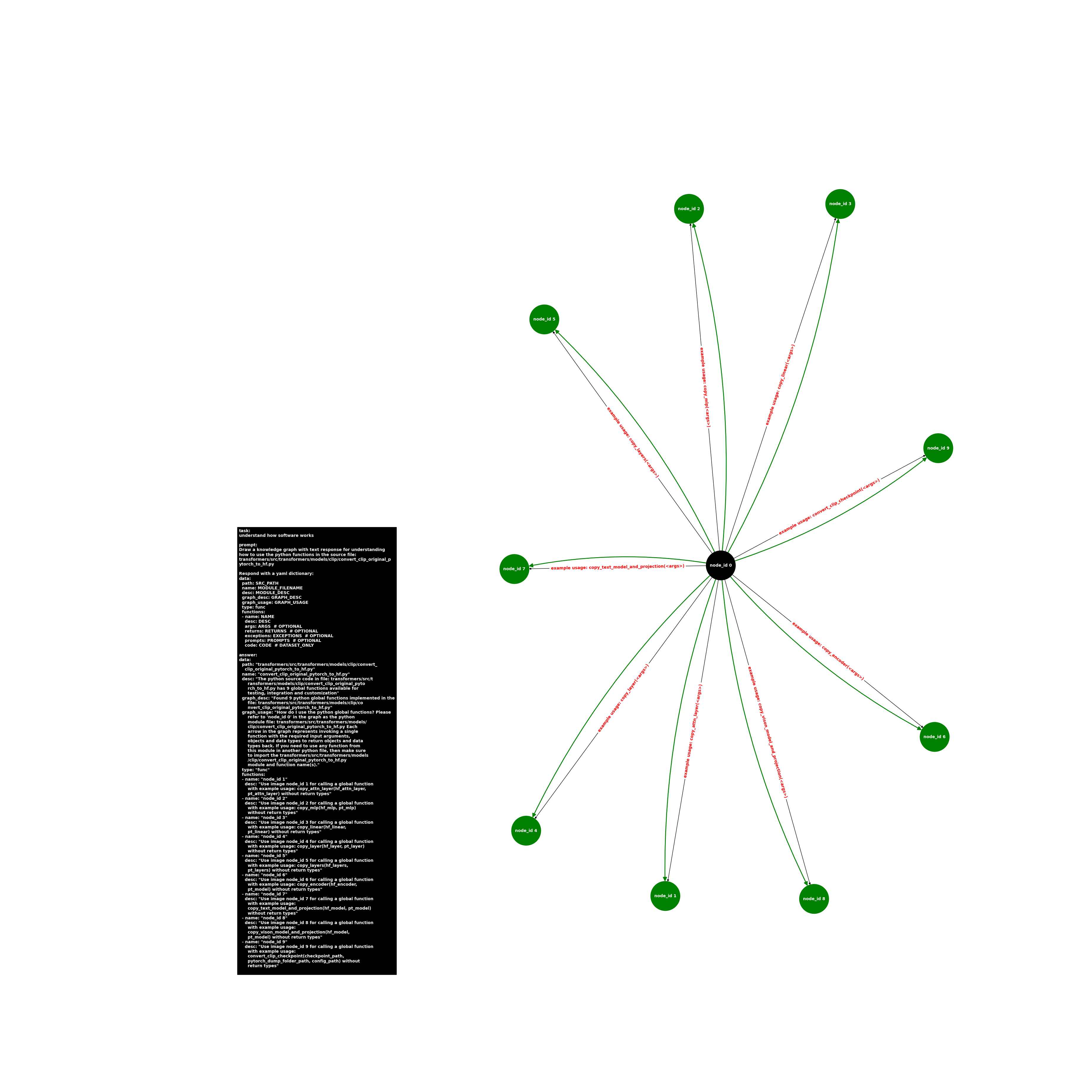

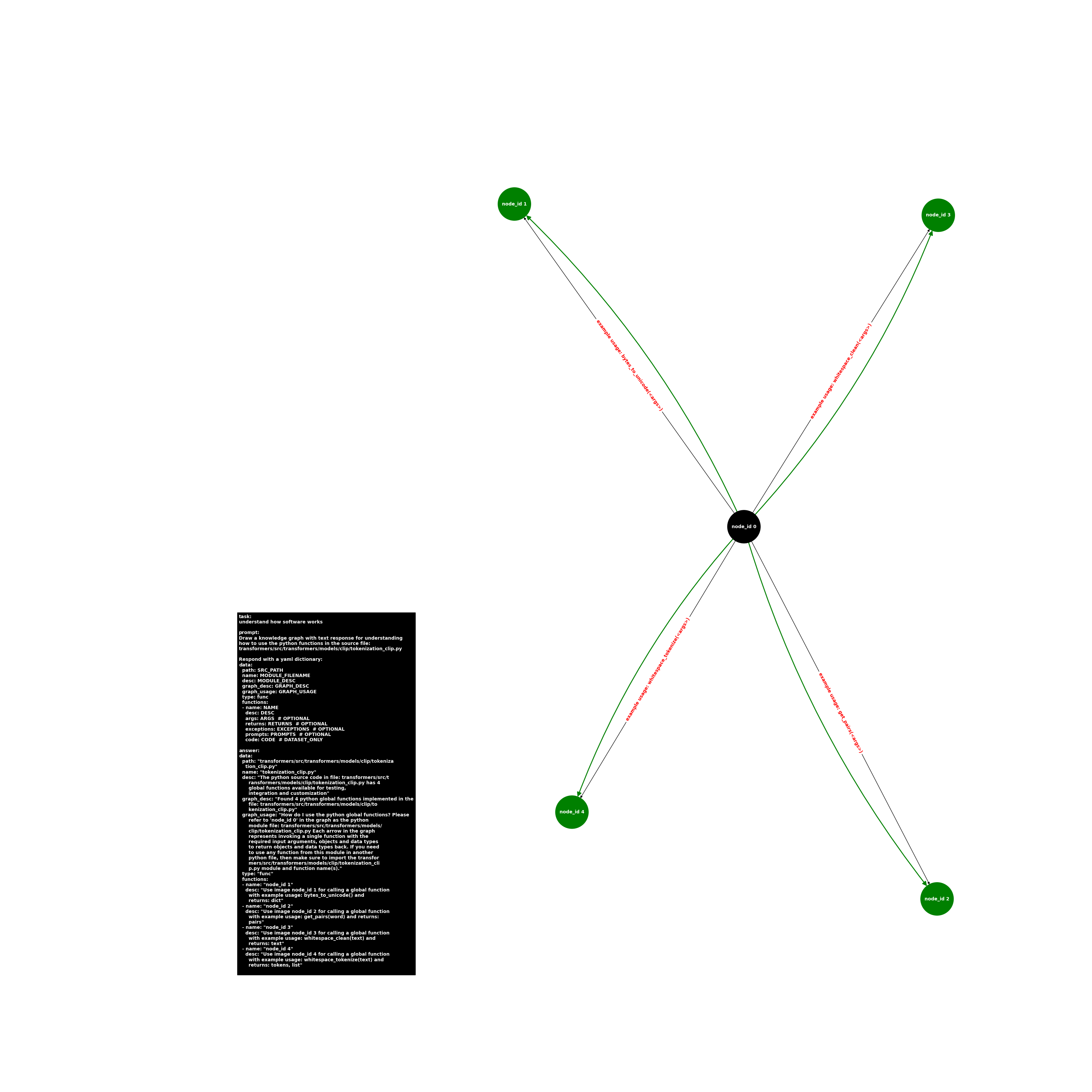

Here are samples from the python copilot global functions image knowledge graph dataset (130 GB). These images attempt to teach how to use software with a networkx graph saved as a png with an alpaca text box:

How to use the transformers/src/transformers/models/clip/convert_clip_original_pytorch_to_hf.py global functions

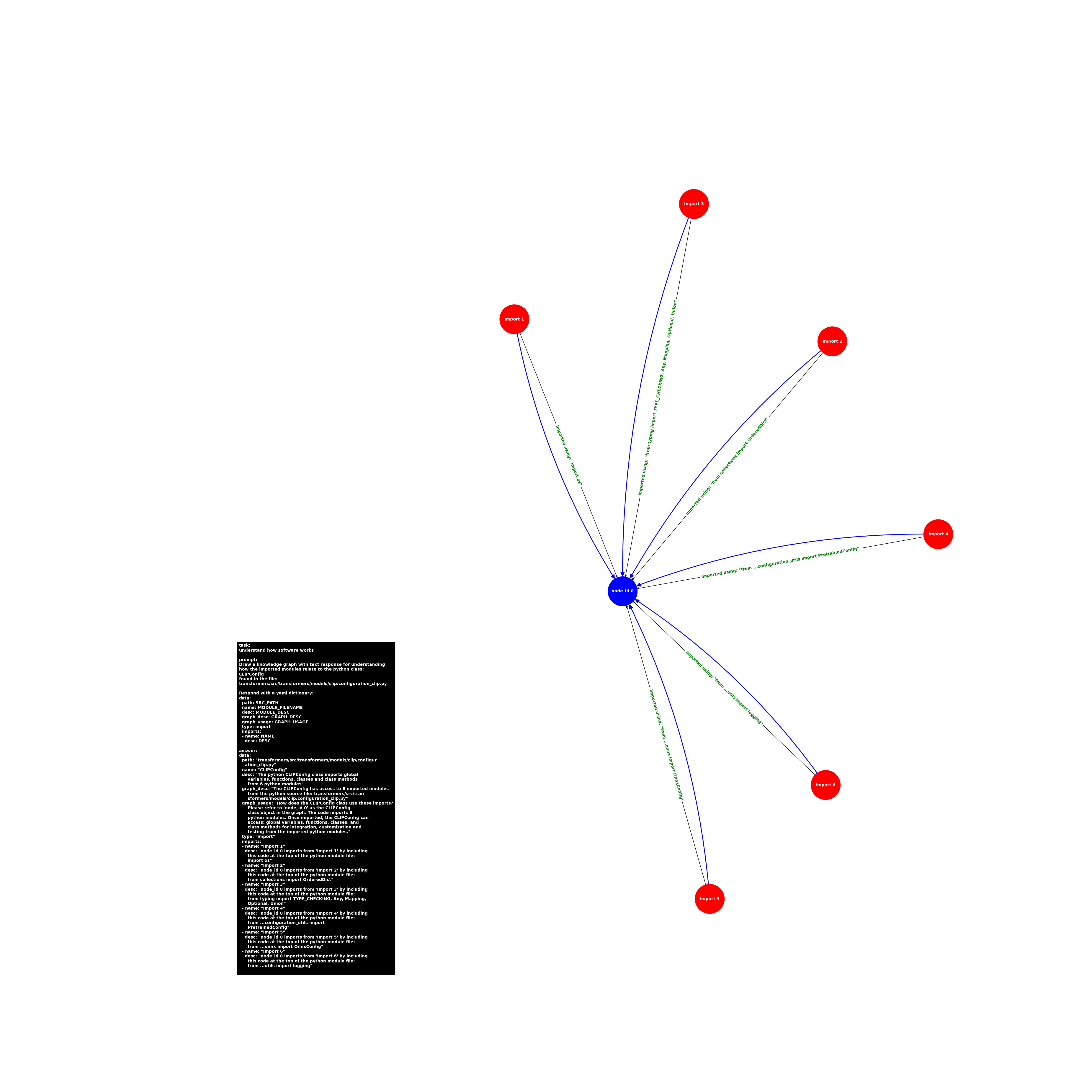

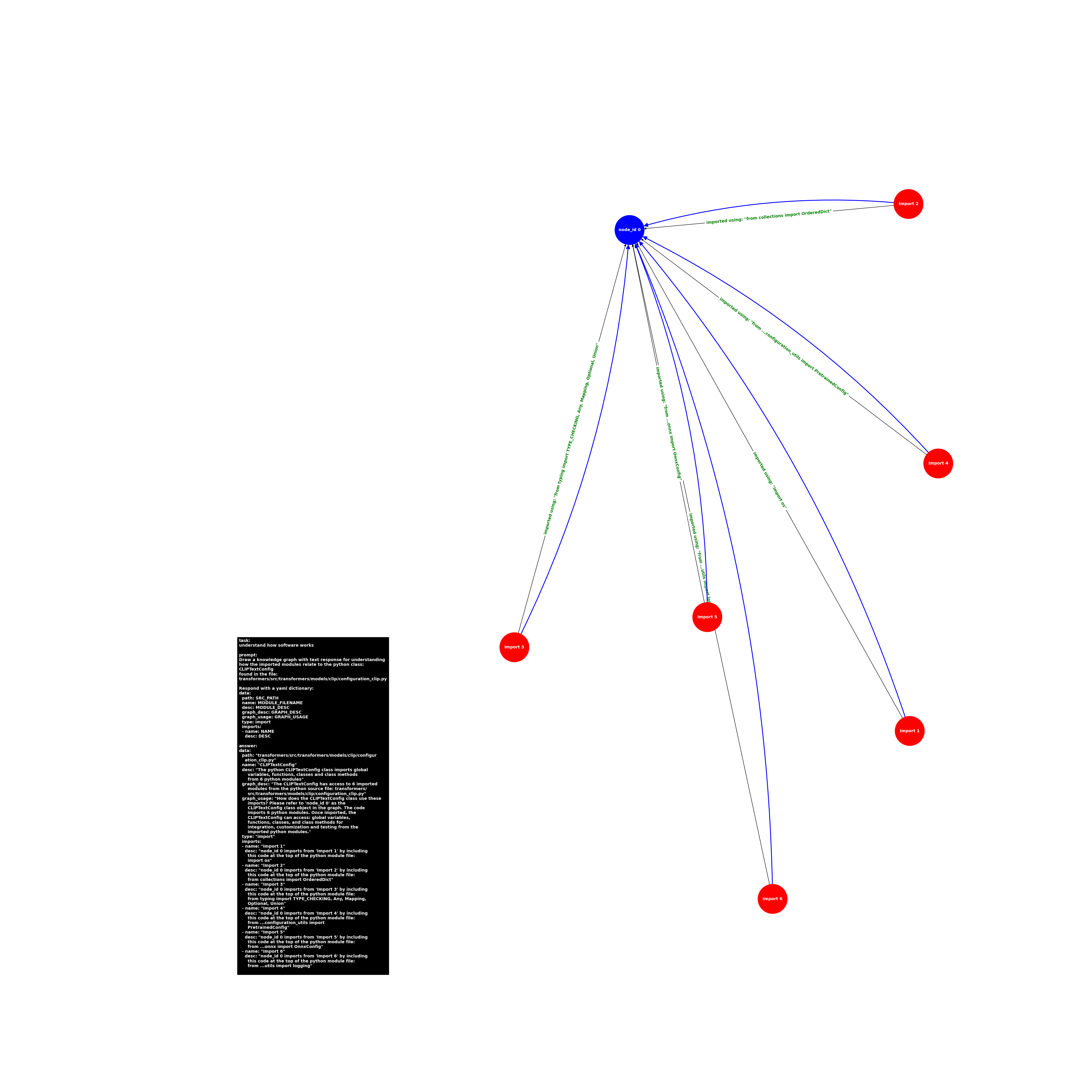

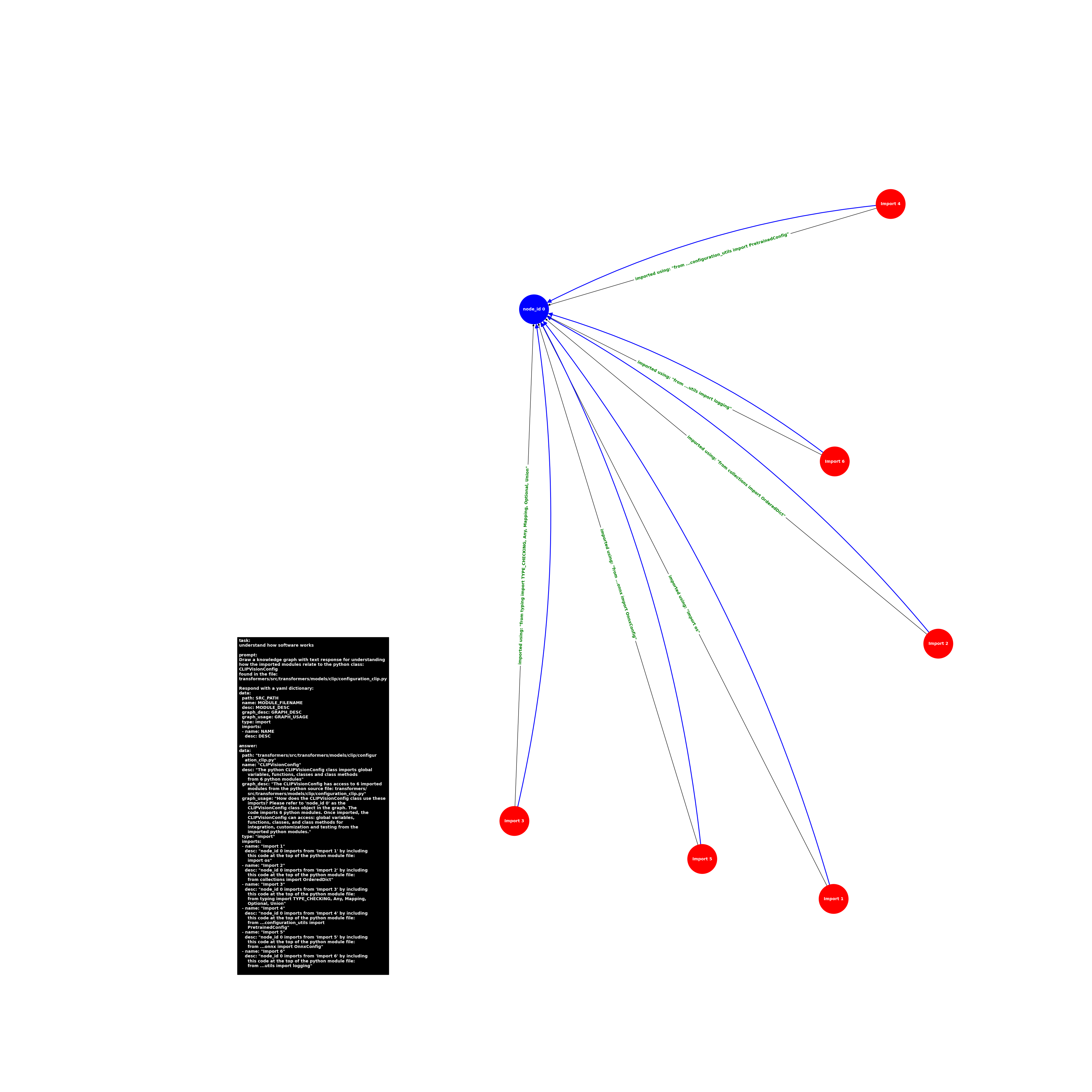

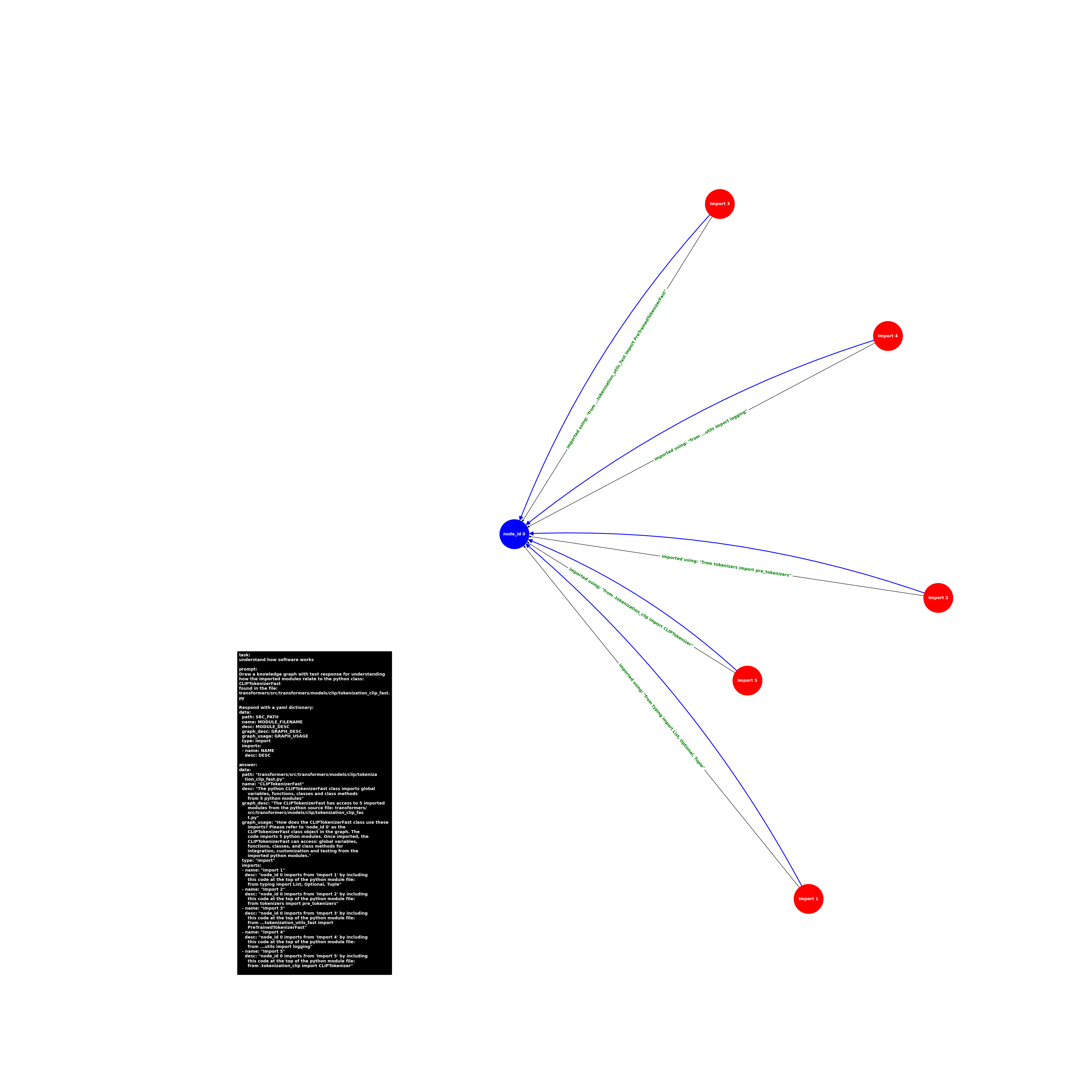

Here are samples from the python copilot imports image knowledge graph dataset (211 GB). These images attempt to teach how to use software with a networkx graph saved as a png with an alpaca text box:

How to use the transformers/src/transformers/models/clip/configuration_clip.py imports like the CLIPConfig class

How to use the transformers/src/transformers/models/clip/configuration_clip.py imports like the CLIPTextConfig class

How to use the transformers/src/transformers/models/clip/configuration_clip.py imports like the CLIPVisionConfig class

How to use the transformers/src/transformers/models/clip/tokenization_clip_fast.py imports like the CLIPTokenizerFast class
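
For planning downloads, the sizes quoted for the four image knowledge graph datasets can be added up. The short sketch below uses only the figures stated in this overview and assumes that 1 TB = 1000 GB and that the 1.2 TB total covers all of the datasets, which this overview does not spell out.

```python
# Sizes in GB as quoted in this overview for each image knowledge graph dataset.
image_dataset_sizes_gb = {
    "class": 304,
    "base class inheritance and polymorphism": 135,
    "global functions": 130,
    "imports": 211,
}

total_image_gb = sum(image_dataset_sizes_gb.values())
total_all_datasets_gb = 1200  # assumption: the 1.2 TB figure, with 1 TB = 1000 GB

print(f"image datasets: {total_image_gb} GB")
# prints: image datasets: 780 GB
print(f"share of the 1.2 TB total: {total_image_gb / total_all_datasets_gb:.0%}")
# prints: share of the 1.2 TB total: 65%
```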

Below are extracted question and answer mp3 samples. Each mp3 is a recording of either the alpaca question or the alpaca answer. Question mp3s use a different speaker voice than answer mp3s.

Note: mobile browsers have issues playing the mp3s. The markdown fails to render the Listen link on mobile, so a confusing question mark icon is shown instead. Sorry!